UK voice recognition breakthrough holds promise for speech …

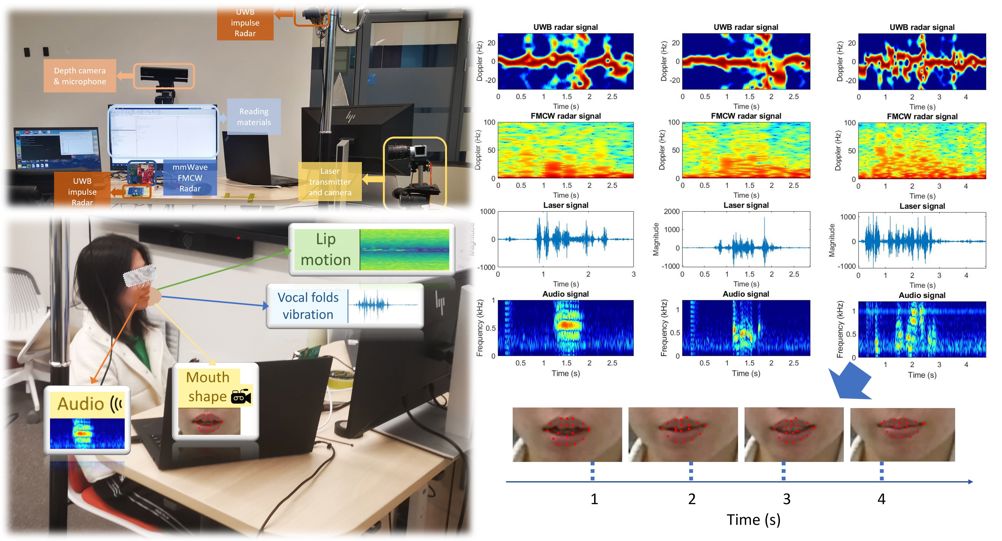

By analysing the physical processes which create the sounds of speech, the team has built a dataset that could underpin the development of speech recognition systems able to read the lips and facial movements of people with speech impairments and provide them with a synthesised voice. To gather their data, the research group - which includes researchers from the University of Dundee and University College London - asked 20 volunteers to speak a series of vowel sounds, single words and entire sentences while complex scans of their facial movements and recordings of their voices were collected. The team then used two different radar technologies - impulse radio ultra-wideband (IR-UWB) and frequency modulated continuous wave (FMCW) - to image the movement of the volunteers' facial skin as they spoke, along with the movements of their tongue and larynx.

Meanwhile, vibrations on the surface of their skin were scanned with a laser speckle detection system, which used a high-speed camera to capture the vibration of emitted laser speckle. A separate Kinect V2 camera capable of measuring depth read the deformations of their mouths as they shaped different sounds.

The researchers analysed the facial and lip movements of 20 volunteers - University of Glasgow

According to the research group, the work could enable voice-controlled devices like smartphones to read users' lips as they speak silently, improve the quality of video and phone calls in noisy environments, and even help improve security for banking or confidential transactions by analysing users' unique facial movements before unlocking sensitive stored information. Professor Muhammad Imran, leader of the University of Glasgow's Communications, Sensing and Imaging hub, said: "Contactless sensing has huge potential for improving speech recognition and creating new applications in communications, healthcare and digital security.

"We're keen to explore in our own research group here at the University of Glasgow how we can build on previous breakthroughs in lip-reading using multi-modal sensors and find new uses everywhere from homes to hospitals."

A paper on the research[1] is published in the journal Scientific Data.

References

- [1] A paper on the research (www.nature.com)